Op-Ed Column

Excellence in Software

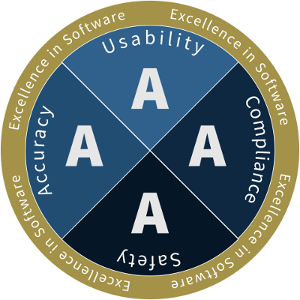

Accuracy · Usability · Safety · Compliance

by Joe Honton

Every once in a while we come across a truly delightful piece of software: one that lets us accomplish something significant, that's intuitively clear to operate, that safely and privately handles our needs, and that complies with industry standards and legal requirements.

We can easily recognize the excellent quality of such software. Every aspect of its inner working is intentionally created to meet specific goals; every feature serves a purpose and works correctly; nothing distracts from the task at hand. In short, software of this type just works.

There was a time when the phrase "easy to use" was a distinguishing mark of excellence. It separated new, graphically designed interfaces from older, inscrutably formatted layouts with hard-to-decipher codes. There was also a time when posting a notice such as "this site best viewed with _____" at the bottom of a web page was good enough to say "we're done." And there was a time when simply producing bug-free software was the high-bar goal that we set for ourselves.

But we've grown up, and these statements are no longer acceptable. We need to reach higher. We need to be rigorous in our assessment of the software we create.

To start with, we shouldn't be waiting until the tail-end of our project to define our quality goals. Rather, the product team — including managers, designers, graphic artists, programmers, and testers — should be setting those goals from the outset of the project. And in today's world, those goals should be measurable and should cover much more than just the outward-facing aspects of the software.

As an industry insider, I've watched software quality standards rise and fall over the past four decades. Sadly, we are not at the top of our game anymore.

Much of today's software may look good on the outside, but that veneer may be covering up serious issues. How else can we explain the massive data breaches that have become so commonplace? How do we justify the common practice of purging bug lists instead of fixing the bugs? How do we account for brand new software that older people and those with physical impairments struggle to use? How do we reconcile our needs for privacy when software collects so much personal information? If we are serious about quality, and want to reap its rewards, we need to consider these types of failures.

So here's a way to think about it — a way that uses explicit goals, and the evaluation of progress towards their attainment. Let's call it "Excellence in Software".

It works through self-examination, and everyone involved in the design and development of the software should play a part. For it to be most effective, a series of acceptance criteria are developed at the outset of the project and refined as the project grows. All answers are pass/fail. Periodically, the software under development is evaluated against these acceptance criteria and the answers are tallied.

Scoring the examination is similar to scoring classwork, where the grade for each of the four sections is A, B, C or D. Each section starts with a perfect A, but only gets to keep it if all of the criteria for that section are fulfilled. Each section's score is reduced one grade (to B, then C, then D) for each criterion that is wholly or partially unmet.

The "Excellence in Software" score that all software should strive for is "AAAA". This is tough. Only the very best will achieve it.
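
The scoring rule can be sketched in a few lines of Python. This is only an illustration, not a prescribed tool: the function names and the dictionary layout are hypothetical, and it assumes that a section's grade bottoms out at D once three or more criteria are unmet, since the scale described here stops there.

```python
# A minimal sketch of the scoring rule, under the assumptions stated above.
GRADES = "ABCD"  # the scale has no grade below D
SECTIONS = ("accuracy", "usability", "safety", "compliance")


def section_grade(results):
    """Grade one section from a list of pass/fail answers.

    A partially met criterion should be recorded as False (fail).
    """
    unmet = sum(1 for passed in results if not passed)
    # Drop one grade per unmet criterion, but never below D (assumption).
    return GRADES[min(unmet, len(GRADES) - 1)]


def excellence_score(answers):
    """Combine the four section grades into a score such as 'AAAA'."""
    return "".join(section_grade(answers[name]) for name in SECTIONS)


if __name__ == "__main__":
    answers = {
        "accuracy": [True, True, True],
        "usability": [True, False, True],    # one unmet criterion -> B
        "safety": [False, False, True],      # two unmet criteria -> C
        "compliance": [True, True],
    }
    print(excellence_score(answers))
```

Tallying the answers this way on every evaluation makes it easy to see whether a project is moving towards "AAAA" or away from it.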

The acceptance criteria to use depend upon the type of software under development. For many apps and websites the criteria would be close to the following, with items grouped into four sections: accuracy, usability, safety and compliance. Consider these to be a good starting point.

Accuracy. These are the classic measures of bug-free software.

- Does the software consistently produce correct results?

- Does the software gracefully handle situations with missing, invalid or extreme input data?

- Does the software faithfully save and restore user data?

- Do sorting routines correctly work with foreign language data?

- Do search routines find and filter results in a way the user expects?

- Do summary records always reflect the true sum of the details?
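
Several of these questions can be turned into automated checks. As one hedged example, here is a sketch of the "true sum of the details" check. It assumes amounts are stored as decimal strings, and the function name is hypothetical. It uses Python's `decimal` module because binary floating point can make a correct summary appear wrong.

```python
from decimal import Decimal


def summary_matches_details(summary_total, detail_amounts):
    """Return True when the summary equals the exact sum of its details.

    Amounts are passed as strings (an assumption of this sketch) so that
    no precision is lost before the comparison.
    """
    exact_sum = sum((Decimal(a) for a in detail_amounts), Decimal("0"))
    return Decimal(summary_total) == exact_sum


if __name__ == "__main__":
    # Binary floats drift: this comparison is False.
    print(0.1 + 0.2 == 0.3)
    # Exact decimal arithmetic gives the answer a user would expect.
    print(summary_matches_details("0.30", ["0.10", "0.20"]))
```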

Usability. These are measures of how well the software accommodates users with different levels of sophistication, users with older hardware, and users with less than 20/20 health.

- Does the software work on every device and browser used by the top 98% of users?

- Does the user interface have readily available hints and prompts to help new users understand and operate each aspect of the software?

- Are form validation messages given to the user in a place and manner that is obvious?

- Do background colors and text colors have sufficient contrast to be readable?

- Does informational feedback still work for people with protanopia (a form of red-green color blindness)?

- Do web components and generic HTML tags have appropriate WAI-ARIA roles assigned?

- Does the software accommodate screen readers by numbering HTML `tabindex` attributes in a most-significant to least-significant order?
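
The color contrast question is one that can be measured rather than judged by eye. The sketch below assumes the project adopts the WCAG 2.x contrast-ratio formula and its AA thresholds (4.5:1 for normal text, 3:1 for large text) as the yardstick; the criteria above do not name a specific standard, and the function names are hypothetical.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1.0 up to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """True when the pair meets the WCAG AA threshold."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#ffffff"), 2))  # black on white
    print(passes_aa("#767676", "#ffffff"))  # mid gray, just passes
    print(passes_aa("#777777", "#ffffff"))  # slightly lighter gray, fails
```

A check like this can run against a design system's color palette during every build, so a failing pair is caught before it reaches users.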

Safety. These are measures of how well the user's data is protected from accidental loss, bad actors, and catastrophic failures.

- Does the data backup schedule provide a stable snapshot of everything at hourly, daily, and weekly intervals?

- Can the data restoration process be completed within two hours?

- Is the catastrophic data storage facility in a separate location from the online data?

- Are user credentials properly encrypted during storage and during network transmission?

- Is multi-factor authentication triggered when access is requested from a new location?

- Are web servers configured to use up-to-date TLS protocols?

- Are the website's Content Security Policy (CSP), Feature Policy, and Referrer Policy properly set up to detect and report breaches?

- Are sequential records protected from brute force and replay attacks?

- Are user interface redress attacks prevented with proper use of the `frame-ancestors` CSP header directive?

- Is user input sanitized to catch cross-site scripting injections when saving data to the database?

- Are financial transactions protected with the use of HMAC-based cross-site request forgery tokens?

- Have all files been scanned for viruses before deployment?

- Are DevOps' server credentials stored separately and outside project repositories?

- Does the project's testing protocol include explicit checks for common data breaches?

- Are web server access logs regularly monitored for abnormal conditions?
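
To make one of these items concrete, here is a minimal sketch of an HMAC-based cross-site request forgery token, using only Python's standard library. It is an illustration under stated assumptions, not a drop-in defense: the function names, the `nonce.mac` token layout, and the idea of binding the token to a session identifier are all choices made for this example, and a production system would also need token expiry and secure key storage.

```python
import hashlib
import hmac
import secrets


def _sign(secret_key, session_id, nonce):
    """HMAC-SHA256 over the session id and nonce, as a hex string."""
    message = f"{session_id}:{nonce}".encode("utf-8")
    return hmac.new(secret_key, message, hashlib.sha256).hexdigest()


def issue_csrf_token(secret_key, session_id):
    """Create a token tied to one session (hypothetical 'nonce.mac' layout)."""
    nonce = secrets.token_hex(16)  # fresh random value for every token
    return f"{nonce}.{_sign(secret_key, session_id, nonce)}"


def verify_csrf_token(secret_key, session_id, token):
    """Return True only if the token was issued for this session."""
    nonce, separator, mac = token.partition(".")
    if not separator:
        return False  # malformed token
    expected = _sign(secret_key, session_id, nonce)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8"))


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # in practice, loaded from secure storage
    token = issue_csrf_token(key, "session-123")
    print(verify_csrf_token(key, "session-123", token))  # same session
    print(verify_csrf_token(key, "session-456", token))  # different session
```

The server embeds the token in each form it renders and rejects any state-changing request whose token fails verification.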

Compliance. These are measures of the software's adherence to industry standards and civil law.

- Do the website's documents use HTML and CSS in a way that passes W3C validators?

- Do browsers evaluate the website's JavaScript without generating console warnings or errors?

- Does the website have a data privacy policy that complies with the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA)?

- Does the software's eCommerce process comply with Payment Card Industry Data Security Standards (PCI DSS)?

- Does the software comply with the Access to Information and Communication Technology (ICT) Section 508 standards for government sites?

- Does the software adhere to the terms and conditions of each third-party software license it uses?

- Are third-party software libraries that are distributed under GNU or MIT licenses properly acknowledged on a public-facing document?

- Does DevOps have a written schedule of expiration and renewal dates for all limited term licenses?

- Has the company established a vulnerability disclosure policy with a way for outside security researchers and bounty hunters to privately provide notification of issues?

These and others may be applicable. Consider them to be a starting point for your project. Remember, each software project should develop its own set of self-evaluation criteria.

Excellence in Software can only happen with intentional effort and careful attention to detail.